QNAP RAM-32GDR4ECK0-SO-3200 QNAP 32GB DDR4 RAM 3200 MHz memory module 1 x 32 GB ECC

QNAP 32GB DDR4 SDRAM Memory Module - For NAS Server - 32 GB - DDR4-3200/PC4-25600 DDR4 SDRAM - 3200 MHz - ECC - 260-pin - SoDIMM

QNAP RAM-8GDR4K0-SO-3200 QNAP 8GB DDR4 RAM 3200 MHz memory module 1 x 8 GB

QNAP 8GB DDR4 SDRAM Memory Module - For NAS Server - 8 GB - DDR4-3200/PC4-25600 DDR4 SDRAM - 3200 MHz - 260-pin - SoDIMM

QNAP RAM-16GDR4K0-SO-3200 QNAP 16GB DDR4 RAM 3200 MHz SO-DIMM memory module 1 x 16 GB

QNAP 16GB DDR4 SDRAM Memory Module - For NAS Server - 16 GB - DDR4-3200/PC4-25600 DDR4 SDRAM - 3200 MHz - 260-pin - SoDIMM

QNAP RAM-8GDR4ECK0-SO-3200 QNAP 8GB DDR4 RAM 3200 MHz memory module 1 x 8 GB ECC

QNAP 8GB DDR4 SDRAM Memory Module - For NAS Server - 8 GB - DDR4-3200/PC4-25600 DDR4 SDRAM - 3200 MHz - ECC - 260-pin - SoDIMM

QNAP RAM-4GDR4A0-UD-2400 QNAP 4GB DDR4 RAM 2400 MHz memory module 1 x 4 GB

QNAP 4GB 288-pin UDIMM DDR4 RAM Module

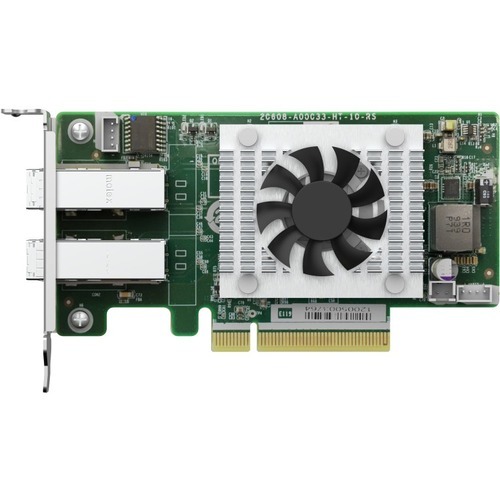

QNAP MUSTANG-F100-A10-R10 QNAP Mustang-F100 interface cards/adapter Internal

As QNAP NAS evolves to support a wider range of applications (including surveillance, virtualization, and AI), you not only need more storage space on your NAS but also need greater processing power to optimize targeted workloads. The Mustang-F100 is a PCIe-based accelerator card built on the programmable Intel® Arria® 10 FPGA, delivering the performance and versatility of FPGA acceleration. It can be installed in a PC or a compatible QNAP NAS to boost performance, making it an ideal choice for AI deep learning inference workloads.

OpenVINO™ toolkit

Based on convolutional neural networks (CNNs), the OpenVINO™ toolkit extends workloads across Intel® hardware and maximizes performance. It can optimize pre-trained deep learning models from frameworks such as Caffe, MXNet, and TensorFlow into an Intermediate Representation (IR) binary file, then run the inference engine heterogeneously across Intel® hardware such as CPUs, GPUs, the Intel® Movidius™ Neural Compute Stick, and FPGAs.

Get deep learning acceleration on Intel-based Server/PC

You can insert the Mustang-F100 into a PC or workstation running Linux® (Ubuntu®) to gain computational acceleration for applications such as deep learning inference, video streaming, and data center workloads. As an ideal acceleration solution for real-time AI inference, the Mustang-F100 can also work with the Intel® OpenVINO™ toolkit to optimize inference workloads for image classification and computer vision.

QNAP NAS as an Inference Server

The OpenVINO™ toolkit extends workloads across Intel® hardware (including accelerators) and maximizes performance. When used with QNAP’s OpenVINO™ Workflow Consolidation Tool, an Intel®-based QNAP NAS makes an ideal inference server that helps organizations quickly build an inference system. Providing a model optimizer and an inference engine, the OpenVINO™ toolkit is easy to use and flexible for high-performance, low-latency computer vision that improves deep learning inference.
AI developers can deploy trained models on a QNAP NAS for inference and install the Mustang-F100 to achieve optimal inference performance.

QNAP QXP-820S-B3408 interface cards/adapter Internal SAS

The SAS HBA PCIe Expansion Card is designed to expand the capacity of a QNAP NAS by connecting it to TL SAS JBOD storage enclosures and REXP expansion units. Multiple expansion units can be daisy-chained via high-speed mini SAS HD cables to expand the total disk capacity up to 4.6PB and meet growing data storage needs. SAS-based storage solutions can help businesses create highly reliable IT environments for virtualization, surveillance, Big Data, video editing, and TV broadcast storage.

Use high-performance and high-availability SAS drives

Compared with SATA, SAS enables low-latency, power-efficient, and IOPS-optimized storage. Each SAS port combines four 12Gb/s SAS channels, delivering up to 48Gb/s per host connection.

Expand your total disk capacity up to 4.6PB

A NAS with a SAS expansion card can be daisy-chained with up to 16 devices, including TL SAS JBOD storage enclosures and REXP expansion units. This provides the potential to store up to 4.6PB (calculated using 18TB drives in sixteen 16-bay enclosures).

Attain faster backup and uninterrupted services with flexible port configuration

SAS expansion cards can be flexibly configured to fit various applications. By configuring multipath or link aggregation between the NAS and its expansion units, data transfer loads are effectively balanced, helping you avoid service interruptions and prevent backup failures.
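The per-port bandwidth and maximum capacity quoted for the QXP-820S-B3408 are simple products of the listed figures. A minimal Python check, assuming decimal units (1 PB = 1000 TB, as drive vendors count) and raw drive capacity before any RAID parity or file-system overhead; the variable names are illustrative only:

```python
# Illustrative check of the SAS figures in the listing (decimal units, raw capacity).
LANES_PER_PORT = 4        # four SAS channels per port (from the listing)
LANE_RATE_GBPS = 12       # 12Gb/s per SAS channel (from the listing)

ENCLOSURES = 16           # sixteen daisy-chained enclosures
BAYS_PER_ENCLOSURE = 16   # 16-bay enclosures
DRIVE_TB = 18             # 18TB drives

# Aggregate bandwidth of one host connection.
port_bandwidth_gbps = LANES_PER_PORT * LANE_RATE_GBPS

# Raw capacity across all enclosures; usable space will be lower after RAID.
total_capacity_tb = ENCLOSURES * BAYS_PER_ENCLOSURE * DRIVE_TB
total_capacity_pb = total_capacity_tb / 1000

print(f"Per-port bandwidth: {port_bandwidth_gbps} Gb/s")
print(f"Raw capacity: {total_capacity_tb} TB = {total_capacity_pb:.1f} PB")
```

The result, 4608 TB, rounds to the 4.6PB stated in the listing.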

Netgear GS105E-200NAS ProSafe Plus Switch, 5-Port Gigabit Ethernet - 5 Ports - Layer 2 Supported - Wall Mountable - Lifetime Limited Warranty - None Listed Compliance

MFR: Netgear, Inc.

Part #: GS105NA